The official title for this session was “Discovery Tools for Archival Collections: Getting the Most Out of Your Metadata.” The session was divided into two presentations, with an introduction and question moderation by Jaime L. Margalotti, senior assistant librarian in Special Collections at the University of Delaware.

Introduction to Metadata Standards

Michael Bolam, metadata librarian for digital production, is in charge of the metadata for all of the collections at the Digital Research Library at the University of Pittsburgh. He is not an archivist, but he does know where the archives is at Pitt! He has put a great deal of archival material online by digitizing it and assigning metadata.

The best definition of metadata he has found, one that works for all audiences: “Metadata consists of statements we make about resources to help us find, identify, use, manage, evaluate and preserve them.” (Marty Kurth, Head of Metadata Services, Cornell University Libraries)

He reviewed examples of metadata for images, text documents and archival collections. There is also administrative, behind-the-scenes data related to the business of scanning and making content available. Standards let you take your data and reuse it for other purposes.

An overview of the alphabet soup of metadata standards:

- MARC: bibliographic information in machine-readable form (a MAchine-Readable Cataloging record).

- Dublin Core: the goal of Dublin Core was to create a core set of metadata fields that could be used across platforms and across disciplines.

- MARCXML: a schema for representing MARC in XML. It makes it easy to convert to and from MARC without losing any data, though it may carry more data than you need. MARCXML is not very ‘human readable’: you need to recall the numeric codes for the different data elements. It can be exported from the Archivists’ Toolkit.

- MODS: Metadata Object Description Schema, sort of a ‘MARCXML light’. It tries to be a step between MARCXML (robust and complicated) and Dublin Core (really simple). Multiple MARCXML fields may be compacted into a single MODS field, so you may lose some of the granularity of the data. The tags ARE human readable: they are words, not numbers (an author’s name, for example, goes in a MODS name element rather than MARC field 100). It can also be exported from the Archivists’ Toolkit.

- ONIX: ONline Information eXchange, the standard used by the book publishing industry. It is an XML-based standard for representing and communicating information about intellectual property in published form, both physical and digital. The data is created by the publisher, and ONIX represents authors, keywords, etc. differently from the Library of Congress and library cataloging practice.

- METS: Metadata Encoding & Transmission Standard. An XML standard that acts as a wrapper for describing divergent types of content within a digital library. Books, images, collections, etc. often keep their metadata in different formats; METS lets you bring them together.

- OAI-PMH (Open Archives Initiative Protocol for Metadata Harvesting): not a metadata standard, but rather a protocol for sharing metadata. It gives us a way to pull baseline information about a digital object out of a database and expose it somewhere it can be harvested and used.
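To make the simplest of these formats concrete, here is a minimal Python sketch (an illustration, not code shown in the session) that builds a Dublin Core record in the oai_dc wrapper that OAI-PMH repositories use. The namespace URIs are the standard ones; the helper name build_oai_dc and the sample values are hypothetical, and a production record would also carry schema-location attributes that are omitted here.

```python
import xml.etree.ElementTree as ET

# Standard namespace URIs for the OAI-PMH Dublin Core container and the DC elements.
OAI_DC_NS = "http://www.openarchives.org/OAI/2.0/oai_dc/"
DC_NS = "http://purl.org/dc/elements/1.1/"

# Register prefixes so the output uses oai_dc: and dc: instead of ns0:, ns1:.
ET.register_namespace("oai_dc", OAI_DC_NS)
ET.register_namespace("dc", DC_NS)


def build_oai_dc(fields):
    """Build an oai_dc record from {element name: [values]} (hypothetical helper)."""
    root = ET.Element(f"{{{OAI_DC_NS}}}dc")
    for element, values in fields.items():
        for value in values:  # Dublin Core elements are repeatable
            ET.SubElement(root, f"{{{DC_NS}}}{element}").text = value
    return ET.tostring(root, encoding="unicode")


# Sample values for illustration only.
record = build_oai_dc({
    "title": ["Walter Penn Shipley Papers"],
    "type": ["Collection"],
})
print(record)
```

Because every Dublin Core element may repeat, the sketch takes a list of values per element rather than a single string.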

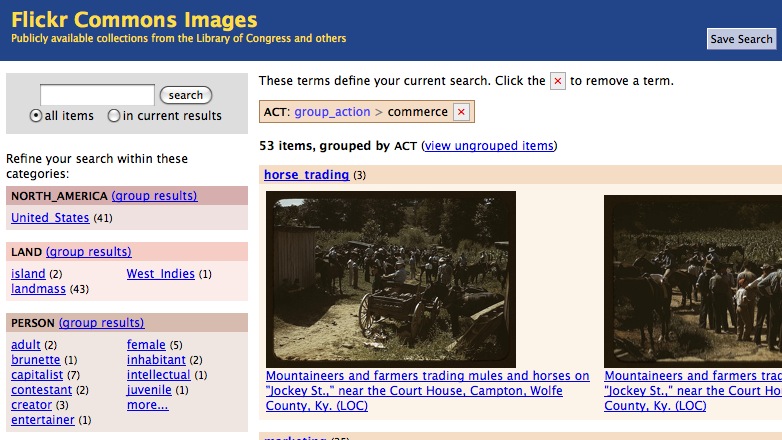

Examples of projects built on shared metadata:

- WorldCat.org: has everything that is shared with OCLC. OCLC does expose its records to Google and Yahoo harvesting.

- OAIster: searches a harvested data set rather than going live out on the web. The OAIster records are also available in WorldCat. Example: search for Pittsburgh City Photographer (one of the data providers). Most digitization software can generate an OAI-harvestable version of its records. In his example, address and location get compressed into Notes because Dublin Core does not always have an element that maps to the level of detail you collect at your local institution. http://www.oclc.org/us/en/oaister/default.htm has information about contributing your content for harvesting.

- Archive Grid: the goal is to pull in finding aids from many sources. It is a subscription service that requires payment to see the data. It uses Lucene for searching. The content in Archive Grid is now available in WorldCat. To participate, see http://www.oclc.org/us/en/archivegrid/default.htm

Google and Yahoo do index OAIster and WorldCat, so that is one path to being found in search engines.

MARC Records for Archival Materials in WorldCat Local

Jennifer MacDonald from the University of Delaware presented a cataloger’s perspective on the WorldCat Local environment. She is a “concerned enthusiast” with regard to metadata. The University of Delaware was the first institution to buy WorldCat Local, and she ended up on the WorldCat Local Special Collections and Archives Task Force. The task force made its final report in 2008 and got a response from OCLC in 2009. Some changes came immediately from their feedback, such as moving the 520 “summary” data element higher in the display. For other problems the task force identified, it is hard to tell whether they have been fixed; for example, archival materials were not being identified properly (Internet Resource is the type assigned to all OAI records).

She showed some screenshots from WorldCat Local to illustrate which data elements appear and how they are organized. In the FirstSearch screenshot (FirstSearch is only available at the school), the Notes and General Info sections hold a mishmash of content from various data elements consolidated into single fields. The task force asked for a “Browse” feature, but apparently this feature is dead: OCLC gave no response to that request in its reply to the report.

If you use the University of Delaware instance of WorldCat Local to search for walter penn shipley and drill down to the detailed record display for the Walter Penn Shipley Papers, you will see what was shown during the session. This display is customizable at the institution level in WorldCat Local. Some data is shown, along with lots of Web 2.0 options to add your own data, but the display is missing some of the data from the original MARC record. The full MARC record is indexed for keyword searching, but since some of it is not displayed, users may not be able to tell why a record was returned.
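The display problem can be illustrated with a toy model: a keyword index that covers every field of a record, paired with a display that shows only some of them. This is a hypothetical Python sketch, not how WorldCat Local is implemented; the record content, the tags chosen for DISPLAYED_TAGS and the function names are all invented for illustration.

```python
# A hypothetical MARC-like record: tag -> field text (content invented).
RECORD = {
    "245": "Sample family papers",
    "520": "Letters and account books.",
    "545": "The family operated a grist mill.",
    "351": "Arranged in three series.",
}

# Tags this (hypothetical) public display shows; every tag is still indexed.
DISPLAYED_TAGS = {"245", "520"}


def keyword_match(record, term):
    """Return the tags whose text contains the term (a full-record keyword index)."""
    term = term.lower()
    return [tag for tag, text in record.items() if term in text.lower()]


def explain_hit(record, term, displayed):
    """Split matching tags into those a user can see and those hidden from display."""
    hits = keyword_match(record, term)
    visible = [tag for tag in hits if tag in displayed]
    hidden = [tag for tag in hits if tag not in displayed]
    return visible, hidden


visible, hidden = explain_hit(RECORD, "mill", DISPLAYED_TAGS)
print(visible, hidden)  # the only match is in 545, which is not displayed
```

A search for "mill" returns the record, but the only match is in the 545 biographical note, so nothing on screen explains the hit.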

Fields missing from the WorldCat Local display:

- 351 – Organization and Arrangement of Materials

- 545 – biographical note

- 506 – restrictions on access

- 540 – Use of materials – with link to an askspec page: http://www.lib.udel.edu/cgi-bin/askspec.cgi

- 524 – preferred citation form, which is where the manuscript number appears

- 655 – some of the parts of the genre terms are missing

- 656 – occupation

OCLC says it has not included all of this because people don’t want it displayed. Given that each local institution is already deciding what to show, the task force would prefer the option to display all data elements. Because of this missing data, Jennifer prefers the FirstSearch interface, but that option is not available at all institutions. You should take advantage of the Web 2.0 features: archivists can create an account on WorldCat Local and add data elements.

Questions and Answers

QUESTION: You talked about having the metadata in a format that is accessible to harvesting. What I have is a bunch of CDs of images, sent by a New York Stock Exchange photographer, that have a folder and descriptor structure. Is there a metadata harvester that can go in and pull that metadata out?

ANSWER (Michael): So the metadata you are looking to extract is the filenames and descriptors? You could have someone write a little script to extract what you need. I would hand it to the guy I work with, because he writes Perl. If you then made that available via your website, people could find it. Getting it into a database is just a small script.
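Michael’s suggestion is easy to sketch. Assuming (hypothetically) that each folder level on a disc is a descriptor and each filename is a usable caption, a short script can turn the directory tree into rows ready for a spreadsheet or database. He mentioned Perl; Python is used here only to keep the examples in this post in one language, and the file types, paths and folder names are invented.

```python
import csv
import io
from pathlib import Path, PurePosixPath

# File types treated as images (an assumption; adjust for the actual discs).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".tif", ".tiff", ".png"}
FIELDS = ["file", "title", "descriptors"]


def row_for(relative_path):
    """Turn one relative path like 'folder/sub/name.jpg' into a metadata row."""
    rel = PurePosixPath(relative_path)
    return {
        "file": rel.as_posix(),
        "title": rel.stem.replace("_", " "),  # filename stem as a rough caption
        "descriptors": "; ".join(rel.parent.parts),  # each folder level as a descriptor
    }


def extract_rows(root):
    """Walk a directory tree (e.g. a mounted CD) and yield one row per image file."""
    root = Path(root)
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            yield row_for(path.relative_to(root).as_posix())


def to_csv(rows):
    """Serialize rows as CSV text for loading into a spreadsheet or database."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical path: a folder for a subject, a subfolder for a roll of film.
print(row_for("trading_floor/roll_03/opening_bell.jpg"))
```

Pointing extract_rows at the mount point of a disc and passing the result to to_csv would produce one line per image; how well the rows describe the photographs depends entirely on how consistently the folders were named.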

QUESTION: Are there any specifically useful webinars/seminars for becoming familiar with these formats for skillbuilding?

ANSWER (Michael): There are tons on the web. The Library of Congress websites are very useful. You may have heard the term ‘crosswalking’: taking one format and turning it into another. Looking at the crosswalks can make it much easier to understand how a format you know maps to one you are trying to learn. “Shareable Metadata: metadata for you and me” is not online yet, but someone in the audience said the plan is to post the materials. There have been a couple of books and ALA publications, but most of the ones I know of are about 10 years old. Jaime: SAA has a good workshop series.
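A crosswalk is essentially a mapping table plus rules for fields that have no direct equivalent. The Python sketch below uses a small, simplified set of MARC-to-Dublin Core mappings in the spirit of the Library of Congress crosswalk (check the official crosswalk before relying on any row); the catch-all rule that sends other 5XX notes to description, the function names and the sample record are assumptions for illustration. It also shows the granularity loss discussed in this session: the summary, preferred citation and biographical note all collapse into one element.

```python
# Simplified, illustrative MARC tag -> Dublin Core element mappings.
# Verify against the official Library of Congress crosswalk before reuse.
MARC_TO_DC = {
    "100": "creator",
    "245": "title",
    "506": "rights",
    "540": "rights",
    "650": "subject",
}


def dc_element_for(tag):
    """Return the DC element for a MARC tag, or None if this sketch has no mapping."""
    if tag in MARC_TO_DC:
        return MARC_TO_DC[tag]
    if tag.startswith("5"):
        return "description"  # assumed catch-all for other 5XX notes
    return None


def crosswalk(marc_fields):
    """Map (tag, text) pairs to {DC element: [values]}; unmapped fields are dropped."""
    dc = {}
    for tag, text in marc_fields:
        element = dc_element_for(tag)
        if element is not None:
            dc.setdefault(element, []).append(text)
    return dc


# Hypothetical record content, for illustration only.
SAMPLE_FIELDS = [
    ("245", "Sample family papers"),
    ("351", "Arranged in three series."),
    ("520", "Letters and account books."),
    ("524", "[Item], Sample family papers."),
    ("540", "Contact the repository for permissions."),
    ("545", "The family operated a grist mill."),
]

for element, values in crosswalk(SAMPLE_FIELDS).items():
    print(element, values)
```

In this sketch the 351 arrangement note has no mapping at all and is silently dropped, which is the kind of loss worth checking for whenever you read a real crosswalk.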

QUESTION: One of the first things you said was to take data out of EAD, but you didn’t go into detail on that. Were you talking about DAO-tagged items?

ANSWER (Michael): I was just talking about reusing data in a new environment. For example, we just started digitizing manuscripts, and each item is becoming an individual digital object. The only metadata we have is in the EAD finding aid, so we are using that data to create descriptive metadata for the digital objects. We are going to create a MODS or METS record for every digital object. Jaime: We use EAD to make MODS records. She has also been manually extracting EAD data as Dublin Core for CONTENTdm.
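Reusing finding-aid data this way can be sketched in a few lines of Python. The EAD fragment below is invented and uses no namespace for brevity (many real EAD 2002 files declare one, which would have to be added to every path), and the sketch only handles a two-level series/item hierarchy. The output keys are loosely modeled on MODS element names but are not a valid MODS record. The key idea is that each item inherits context, such as the collection and series titles, from the hierarchy above it.

```python
import xml.etree.ElementTree as ET

# Invented EAD fragment without a namespace (real files often declare one).
SAMPLE_EAD = """
<ead>
  <archdesc level="collection">
    <did><unittitle>Sample Family Papers</unittitle></did>
    <dsc>
      <c01 level="series">
        <did><unittitle>Correspondence</unittitle></did>
        <c02 level="item">
          <did>
            <unittitle>Letter to a cousin</unittitle>
            <unitdate>1890</unitdate>
          </did>
        </c02>
      </c01>
    </dsc>
  </archdesc>
</ead>
"""


def item_records(ead_text):
    """Build one MODS-like dict per item-level component, inheriting context."""
    root = ET.fromstring(ead_text.strip())
    collection = root.findtext("archdesc/did/unittitle")
    records = []
    for series in root.iterfind("archdesc/dsc/c01"):
        series_title = series.findtext("did/unittitle")
        for item in series.iterfind("c02[@level='item']"):
            records.append({
                "title": item.findtext("did/unittitle"),
                "dateCreated": item.findtext("did/unitdate"),
                # Collection and series titles carried down from the hierarchy.
                "host": f"{collection}. {series_title}",
            })
    return records


print(item_records(SAMPLE_EAD))
```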

My QUESTION: What format does OAIster want?

ANSWER (Michael): OAIster harvests only Dublin Core. You can share MODS and other metadata formats, and you may find other aggregators that expect their users to work in a more detailed environment. You may publish more data elements for other harvesters as well, but OAIster will only pull the Dublin Core data elements.
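On the protocol side, a harvester asks a repository for records in a specific format using the ListRecords verb and a metadataPrefix argument. OAI-PMH requires every repository to support oai_dc, which is why Dublin Core is the common denominator; any other formats a repository offers can be discovered with the ListMetadataFormats verb. The Python sketch below only builds request URLs; the base URL and function names are hypothetical, and a "mods" prefix works only if a given repository advertises one.

```python
from urllib.parse import urlencode

# Hypothetical repository endpoint; substitute a real OAI-PMH base URL.
BASE_URL = "https://repository.example.edu/oai"


def list_records_url(base_url, metadata_prefix="oai_dc", resumption_token=None):
    """Build a ListRecords request URL for an OAI-PMH repository."""
    if resumption_token:
        # resumptionToken is an exclusive argument: only the verb may accompany it.
        params = {"verb": "ListRecords", "resumptionToken": resumption_token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    return f"{base_url}?{urlencode(params)}"


def list_formats_url(base_url):
    """Build a ListMetadataFormats request to see which formats a repository offers."""
    return f"{base_url}?{urlencode({'verb': 'ListMetadataFormats'})}"


print(list_records_url(BASE_URL))          # Dublin Core, which every repository must support
print(list_records_url(BASE_URL, "mods"))  # only if the repository offers a "mods" prefix
print(list_formats_url(BASE_URL))
```

A harvester like OAIster that asks only for oai_dc never sees the richer formats, even when the same repository publishes them alongside.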

QUESTION: We are working on a project to digitize collections from local historical societies, museums and libraries. Will the catalogers be able to deal with MODS, or will the loss of granularity be a problem?

ANSWER (Michael): I am not a MODS expert, but MARC is very granular. Maybe look at the MARCXML-to-MODS crosswalk?

QUESTION: At the University of Delaware, do you have any other systems?

ANSWER (Jennifer): When we first got WorldCat Local you had to know the URL to get to the library. That changed fast! The patrons couldn’t find anything. Jaime: In WorldCat Local you cannot scope the search to specific sub-collections.

QUESTION: Thank you, Jennifer, for your remarks. Is there a problem with catalogers trying to ‘sneak’ data elements into other fields? Are standards in danger?

ANSWER (Jennifer): I would hope we wouldn’t move 524 data into a 500 field just to get it displayed. There is some danger of losing granularity by pushing everything to Dublin Core, but I don’t know how real that danger is at this point.

QUESTION: A political question for Jennifer: Who has the clout to push for changes with OCLC?

ANSWER (Jennifer): I think encouraging users to give feedback is important. We were told, in effect, “we have proven that users don’t want that.” Users need to comment on the challenges they face in dealing with the interface. FROM AUDIENCE: The strongest leverage is to say that you are looking at SkyRiver. FROM AUDIENCE: Make your data more discoverable outside the catalog world, on internal websites and Google. Jaime: We are working hard to make MARC records to increase access to our collections. The push is to make the data available in as many locations as possible.

QUESTION: Are these all different levels of subscriptions? Are they trying to push people to buy more subscriptions?

ANSWER (Jennifer): There is a sense that WorldCat Local is being pushed at local public libraries. Yes, WorldCat Local is something they have to pay for. Michael: With Archive Grid you go a step further: EVERYTHING in the finding aid is indexed. Every search I did in there returned thousands of records. Then I filtered by institution, and it never loaded. FROM AUDIENCE: I think they are revamping Archive Grid, but I don’t know how far along they are. Michael: I love the detail; you don’t have to dig through other data to find something useful. Depending on the institution and how it allows its data to be harvested, you may see less information. Jaime: You have to actively work with OCLC to get Archive Grid to pick up your data.

QUESTION: We are tinkering with users adding tags – are you having any success with people adding tags?

ANSWER (Jaime): No, it isn’t something we have dealt with. WorldCat Local does let you add content like that.

QUESTION: Will OCLC provide that UGC (user generated content) back to the institution?

ANSWER: We wouldn’t know.

QUESTION: Have they provided access to the user studies?

ANSWER: Yes, but the studies are based on watching individuals use the tools.

Image Credit: Statue representing Research by Henry Hering, from an image of the interior of the Field Museum of Natural History.

As is the case with all my session summaries from MARAC, please accept my apologies in advance for any cases in which I misquote, overly simplify or miss points altogether in the post above. These sessions move fast and my main goal is to capture the core of the ideas presented and exchanged. Feel free to contact me about corrections to my summary either via comments on this post or via my contact form.

Bio:

The